Postgresql master-master replication with Bucardo

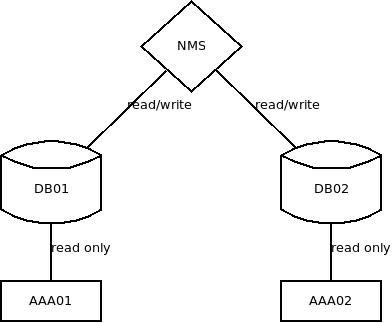

For a network I run a simple AAA setup that looks like this:

Each NAS-client knows of two radius servers (aaa01 and aaa02). If one goes down, they switch over to the other one. Each AAA server has its own database and aaarails as interface.

All connections go via VLANs, so accounting and graphing is done by looking at data from access router interfaces.

A NMS system is responsible for creating/updating/deleting customer profiles (via aaarails) and simply sends all write requests to both AAA servers.

This setup did run stable for a few years. As long as a NAS-client can get access-accept attributes back from any AAA server, the customer will have connectivity.

If one AAA server dies, we just setup a new system and let the NMS system repopulate the database while the NAS-client use the remaining AAA.

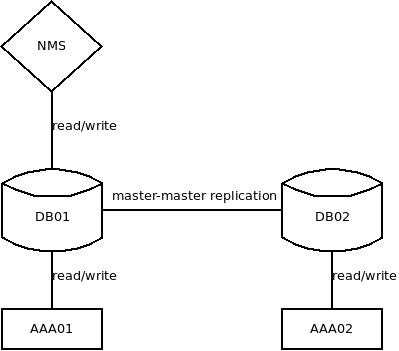

A new technology required some changes so that we also record accounting packets from the AAA servers.

In the old setup all AAA servers used their database read only.

In the new setup all AAA servers need write access to a database and read access is dependent on those writes.

With those new requirements in mind I've build a new system that is still pretty simple but also allows one AAA system to die without affecting customers.

Below you will find my notes about setting up Bucardo on a Debian 7.8 system for testing.

I used the bucardo version that was available in default Debian. This version only supports two masters and has some other limitations. Good enough for my use case, but perhaps you should consider to test the newest version.

My two test hosts

cat /etc/hosts 192.168.56.101 pg01 192.168.56.102 pg02

Install needed packages on both severs

apt-get install postgresql-9.1 bucardo postgresql-plperl sudo This will install two new users: bucardo:x:105:108:bucardo,,,:/var/lib/bucardo:/bin/bash postgres:x:106:109:PostgreSQL administrator,,,:/var/lib/postgresql:/bin/bash

On both severs: Create a 'deploy' user + password and prepare postgres for remote connections.

root@both_servers:/tmp# sudo -u postgres createuser deploy Shall the new role be a superuser? (y/n) y root@both_servers:/tmp# sudo -u postgres psql -d postgres -c "alter user deploy with password 'testing';" ALTER ROLE root@both_servers:/tmp# echo "host all deploy 192.168.56.0/30 md5" >> /etc/postgresql/9.1/main/pg_hba.conf # listen on interface edit /etc/postgresql/9.1/main/postgresql.conf listen_addresses = '*' # (please be more conservative in production than using * ) root@both_servers:/tmp# /etc/init.d/postgresql restart [ ok ] Restarting PostgreSQL 9.1 database server: main.

On both severs: Create the test database.

root@both_servers:/tmp# sudo -u deploy createdb radius

Test connectivity between the two systems:

root@pg01:/tmp# sudo -u deploy psql -h pg02 radius Password: psql (9.1.15) SSL connection (cipher: DHE-RSA-AES256-SHA, bits: 256) Type "help" for help. radius=# \q root@pg01:/tmp# # test other direction: root@pg02:/tmp# sudo -u deploy psql -h pg01 radius Password: psql (9.1.15) SSL connection (cipher: DHE-RSA-AES256-SHA, bits: 256) Type "help" for help. radius=# \q root@pg02:/tmp#

Create the full database on pg01 and the schema on pg02.

root@pg01:/tmp# sudo -u deploy pg_restore /tmp/radius.Fc -d radius root@pg01:/tmp# sudo -u deploy pg_dump --schema-only radius | psql -U deploy -h pg02 -d radius Password for user deploy: SET

Install bucardo on both servers:

root@pg01:/tmp# sudo -u postgres createuser bucardo Shall the new role be a superuser? (y/n) y root@pg01:/tmp# sudo -u postgres psql -d postgres -c "alter user bucardo with password 'bucardo';" ALTER ROLE bucardo install # use the deploy user

I run bucardo on aaa01 and keep it ready on aaa02. This configuration is only done on aaa01:

bucardo add db aaa01_radius dbname=radius host=pg01 user=deploy pass=testing bucardo add db aaa02_radius dbname=radius host=pg02 user=deploy pass=testing bucardo add all tables bucardo add all sequences bucardo add herd aaaherd public.nasclients public.radaccts public.radchecks .... bucardo add dbgroup myaaadbs aaa01_radius:source aaa02_radius:source bucardo add sync mysync herd=aaaherd dbs=myaaadbs status=active bucardo stop

Make initial sync (only data, exclude the bucardo schema and make sure no triggers do run on the other side):

root@pg01:/tmp# sudo -u deploy pg_dump radius --data-only -N bucardo | PGOPTIONS='-c session_replication_role=replica' psql -h pg02 -U deploy -d radius -f -

Start bucardo to apply changes that happened on aaa01 during the initial sync:

bucardo start

At this point, both databases should be in sync. You can check the status:

root@pg01:~# bucardo status PID of Bucardo MCP: 4065 Name State Last good Time Last I/D Last bad Time ========+========+=======================+=============+===========+===========+======= mysync | Good | Apr 28, 2015 05:30:32 | 38h 37m 46s | 935/936 | none |

But what if the replication breaks (network down) and a NAS-client starts to switch between two radius servers back and forward?

Bucardo follows the strategy that if two values are referenced by the same id, the newest one wins.

So data-loss is actually a valid concern and requires some modification to how sequence numbers are generated for the affected tables.

The NMS only writes into one master, so this part is not a concern.

Freeradius only writes into the accounting table (radaccts).

It is required to remove the sequence for this table from bucardo and then modify the way the sequences are generated, to have a non overlapping pattern.

The sequence in question is called 'radaccts_id_seq'.

First remove it from bucardo:

In bucardo version 4 removing a sequence is not implemented correctly, but you can use the remove table command which does the job.

root@pg01:~# bucardo remove table public.radaccts_id_seq Removed the following tables: public.radaccts_id_seqAt this point, the content of the table radaccts is still synced, but the sequences on the two machines now do run independent.

To avoid a collision of sequence numbers, make one server use only even numbers, and the other server only use odd numbers:

# on the first database: radius=# ALTER SEQUENCE radchecks_id_seq INCREMENT BY 2; # check if odd or even: radius=# \d+ radchecks_id_seq; # on the second database: # check if odd or even: radius=# \d+ radchecks_id_seq; # modify next value if not correct value # or insert a dummy value to increase sequence number by one radius=# ALTER SEQUENCE radchecks_id_seq INCREMENT BY 2; # verify the result radius=# \d+ radchecks_id_seq;

Hope that does make some sense to you.

Questions, suggestions or feedback? Please let me know.

Talk to me

IT-Dienstleistungen

Drostestrasse 3

53819 Neunkirchen

Germany

USt-Id-Nr.: DE203610693

web: https://beastiebytes.com/

email: sven@beastiebytes.com

skype: sven2342

phone: +49 22 47 90 80 250

mobile/signal: +49 157 3131 4424

xing,

google+

OTR-Fingerprint: 7849BD93B65F9E4BC1206B06C09B7445721063BC

GPG/PGP-Key: (pub 4096R/069DD13C 2014-02-13) local copy pgp.mit.edu

GPG/PGP-Key: fingerprint: 9BAD 94D3 9176 5BD1 F64F 542E 37E4 3542 069D D13C